Greetings

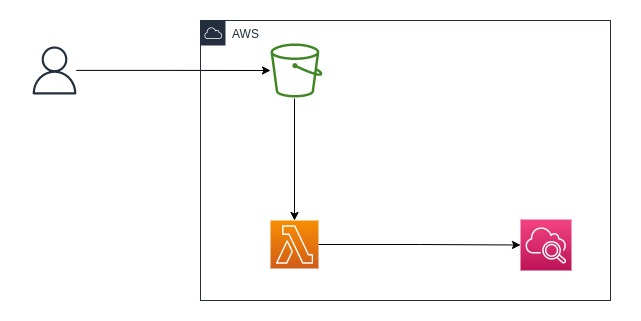

Uploading a file and invoking logic based on it is a common business scenario. A few such use cases are listed below.

- Image resizing, such as thumbnail creation

- Reading a JSON file and invoking logic based on its content

- Image processing

Let's learn how we can achieve this architecture using AWS S3 and Lambda.

S3 Events

We can enable event notifications on an S3 bucket for operations such as a file being added or updated (overwritten). When we enable this feature, we specify the destination that receives the events, such as a Lambda function, and S3 invokes that target whenever a matching operation occurs. Let's build the architecture using the Serverless Framework.
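For reference, the notification S3 delivers to a Lambda function is a JSON document with a `Records` array, where each record names the bucket and the object key. The JavaScript sketch below parses a trimmed, illustrative sample event (not a complete payload) into bucket/key pairs. One detail worth knowing: object keys arrive URL-encoded, with spaces turned into `+`, so they need decoding before being passed to `GetObjectCommand`. The helpers `decodeS3Key` and `extractObjects` are hypothetical names used only for this illustration.

```javascript
// Trimmed, illustrative S3 "ObjectCreated:Put" notification; a real payload
// carries more fields (eventTime, awsRegion, userIdentity, and so on).
const sampleEvent = {
  Records: [
    {
      eventSource: "aws:s3",
      eventName: "ObjectCreated:Put",
      s3: {
        bucket: { name: "s3-lambda-alskdjskdfjs21323" },
        object: { key: "uploads/daily+report.json", size: 42 },
      },
    },
  ],
};

// Hypothetical helper: S3 delivers keys URL-encoded with spaces as "+",
// so turn "+" back into spaces first, then percent-decode the rest.
function decodeS3Key(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, " "));
}

// Hypothetical helper: returns one { bucket, key } pair per record.
function extractObjects(event) {
  return (event.Records ?? []).map((record) => ({
    bucket: record.s3.bucket.name,
    key: decodeS3Key(record.s3.object.key),
  }));
}

console.log(extractObjects(sampleEvent));
```

Iterating over the whole `Records` array, rather than reading only the first entry, costs nothing even when a notification carries a single record.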

Initialize the project

Let's initialize the project using the Serverless CLI.

```shell
mkdir s3-lambda-trigger
cd s3-lambda-trigger
serverless create --template aws-nodejs
npm init -y
npm install @aws-sdk/client-s3 --save
```

Create a folder for our functions.

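```shell
mkdir src
```

Then update package.json so that it looks similar to the following. The `"type": "module"` entry makes Node.js treat the `.js` files as ES modules, which is what allows the handler to use `import` and `export`.

```json
{
  "name": "s3-lambda-trigger",
  "version": "1.0.0",
  "author": "Manjula Jayawardana",
  "description": "Trigger Lambda by S3 event",
  "main": "handler.js",
  "type": "module",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "license": "ISC",
  "dependencies": {
    "@aws-sdk/client-s3": "^3.196.0"
  }
}
```

Next, create a config.json file that holds the stage, region, and S3 bucket name. S3 bucket names must be globally unique, so replace the example name with your own.

```shell
touch config.json
```

```json
{
  "STAGE": "dev",
  "REGION": "us-east-1",
  "S3_BUCKET": "s3-lambda-alskdjskdfjs21323"
}
```

Define functions and resources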

Now, let's write the serverless.yml file to define and create our architecture. It will:

- Allow the Lambda function to read from the S3 bucket

- Define the function to be triggered by the S3 event

- Create the S3 bucket

```yaml
service: s3-lambda-trigger

frameworkVersion: "3"

provider:
  name: aws
  runtime: nodejs16.x
  stage: ${file(config.json):STAGE}
  region: ${file(config.json):REGION}
  environment:
    REGION: ${file(config.json):REGION}
  iam:
    role:
      statements:
        - Effect: "Allow"
          Action:
            - "s3:GetObject"
          Resource:
            - "arn:aws:s3:::${file(config.json):S3_BUCKET}/*"

functions:
  s3Trigger:
    handler: src/s3-trigger.handler
    events:
      - s3:
          bucket: ${file(config.json):S3_BUCKET}
          event: s3:ObjectCreated:*
          existing: true
          rules:
            - suffix: .json

resources:
  Resources:
    S3Bucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${file(config.json):S3_BUCKET}
```
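The `rules` entry under the `s3` event in serverless.yml restricts notifications to objects whose keys end in `.json`. S3 evaluates this filter itself before invoking Lambda, so the function does not need to check it. Purely to illustrate the prefix/suffix semantics, the sketch below mimics the matching locally; `matchesFilter` is a hypothetical helper, not part of the Serverless Framework or the AWS SDK.

```javascript
// Hypothetical helper that mimics S3 key-name filtering for illustration.
// Each rule is shaped like an entry of the serverless.yml `rules` list,
// e.g. { prefix: "uploads/" } or { suffix: ".json" }; a key matches only
// if it satisfies every rule.
function matchesFilter(key, rules = []) {
  return rules.every((rule) => {
    if (rule.prefix !== undefined && !key.startsWith(rule.prefix)) return false;
    if (rule.suffix !== undefined && !key.endsWith(rule.suffix)) return false;
    return true;
  });
}

// The rule used in this article: only keys ending in ".json".
const rules = [{ suffix: ".json" }];

for (const key of ["orders/2022-10-25.json", "images/cat.png", "backup.json.bak"]) {
  const action = matchesFilter(key, rules) ? "invokes s3Trigger" : "ignored";
  console.log(`${key} -> ${action}`);
}
```

Adding a `prefix` rule next to the suffix rule would narrow the trigger further, for example to keys under an `uploads/` folder.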

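In serverless.yml, the `${file(our-file):PROPERTY}` variable reads a property from a file, so `${file(config.json):S3_BUCKET}` resolves to the bucket name defined in config.json.

The provider section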

I have changed the runtime to Node.js 16 (`nodejs16.x`), which was the latest Lambda Node.js runtime at the time of writing. The stage and region are read from the config.json file, and the region is also exposed as the `REGION` environment variable so that the Lambda function can use it. Finally, the IAM role statement grants `s3:GetObject` on the objects in our bucket, which lets the function read the uploaded files.

The resources section

The S3 bucket is defined here, and its name is picked from the config.json file.

The functions section

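Our Lambda function, `s3Trigger`, is defined here; its handler is the `handler` export of src/s3-trigger.js. The function is invoked by any `s3:ObjectCreated:*` event on the bucket, and `existing: true` tells the Serverless Framework not to create the bucket itself, because we create it in the resources section. To make this more practical, I have also defined a rule so that only objects whose keys end in `.json` trigger the function.

Let's write the Lambda function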

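Now it is time to write the Lambda function. I am using AWS SDK v3 in this example, where `GetObjectCommand` returns the object body as a readable stream instead of a ready-to-use string. Hence, I have created a helper in a utils module (src/utils.js) that reads the stream into a string.

```javascript
// src/utils.js
// Collects a readable stream (such as the GetObject Body) into a UTF-8 string.
export const streamToString = (stream) => {
  const chunks = [];
  return new Promise((resolve, reject) => {
    stream.setEncoding("utf8");
    stream.on("data", (chunk) => chunks.push(chunk));
    stream.on("error", (err) => reject(err));
    stream.on("end", () => resolve(chunks.join("")));
  });
};
```

The handler in src/s3-trigger.js reads the bucket name and object key from each event record, fetches the object, and logs its parsed JSON content.

```javascript
// src/s3-trigger.js
import { S3Client, GetObjectCommand } from "@aws-sdk/client-s3";
import { streamToString } from "./utils.js";

// Created once and reused across warm invocations; REGION is set in serverless.yml.
const s3client = new S3Client({ region: process.env.REGION });

export const handler = async (event) => {
  // The Records array may contain more than one entry, so process each one.
  for (const record of event.Records) {
    const params = {
      Bucket: record.s3.bucket.name,
      // Object keys arrive URL-encoded (spaces as "+"), so decode before GetObject.
      Key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
    };

    const { Body } = await s3client.send(new GetObjectCommand(params));
    const json = await streamToString(Body);
    console.log("Uploaded JSON File :: ", JSON.parse(json));
  }
};
```

Conclusion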

In this article, we learned how to trigger a Lambda function when an object is uploaded to an S3 bucket. Following best practices, we defined the whole setup as infrastructure as code using the Serverless Framework.

Happy learning ☺

